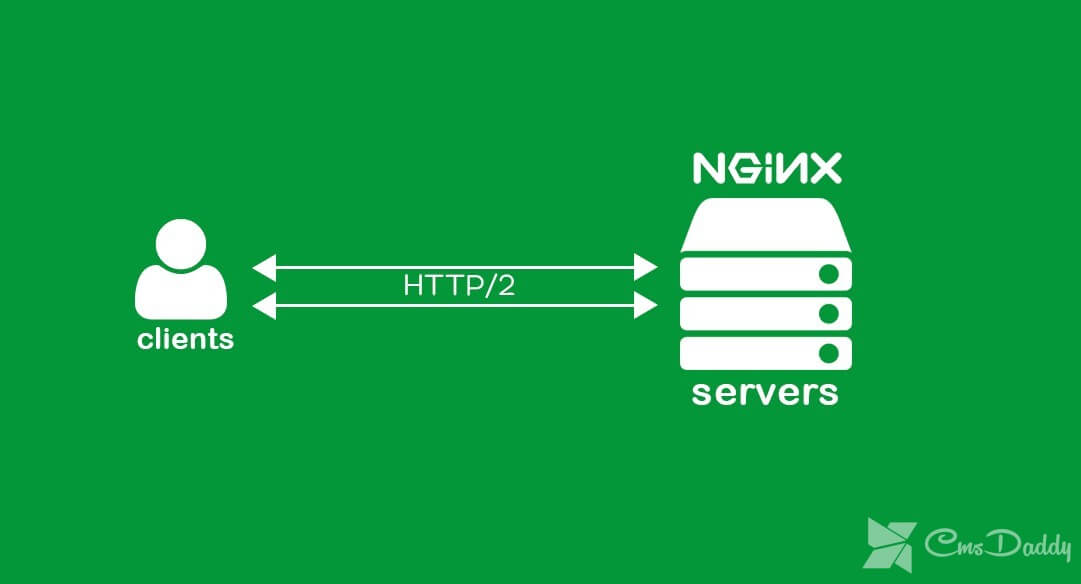

Proxying requests in Nginx with proxy_pass

I want to talk about a useful Nginx feature that I use regularly in my work: proxying requests to a remote server with the proxy_pass directive. I'll give examples of various settings and describe where I use this module of the popular web server myself.

First, a few words of my own about how ngx_http_proxy_module works; this is the module that implements all the functionality discussed here. Suppose you have services on a local or virtual network that have no direct access to the Internet, and you want to reach them from outside. You could forward the necessary ports on the gateway or come up with something else. But the easiest way is to set up a single entry point to all your services in the form of an Nginx server and have it proxy each request to the right server.

Here are specific examples of where I use this. For clarity and simplicity, I'll just list them in order:

- Earlier I wrote about setting up the Matrix and Mattermost chat servers. In those articles I showed how to proxy requests to the chat through nginx, but only in passing, without going into detail. The idea is that you run these chats on any virtual server, place them inside a closed network perimeter without unnecessary access, and simply proxy requests to them. All traffic goes through nginx, which faces the Internet and accepts all incoming connections.

- Suppose you have a large server running many containers, for example with Docker, each hosting a different service. You start one more container with plain nginx and configure it to proxy requests to those containers. The containers themselves publish their ports only on the server's local interface. This way they are completely closed from the outside, and you can still control access flexibly.

- Another popular example. Suppose you have a server running the Proxmox hypervisor or any other. You set up a gateway on one of the virtual machines and create a local network made up only of virtual machines, with no access to it from the outside. On every virtual machine in this network, set the gateway VM as the default gateway. Place your various services on the virtual servers in the local network and don't bother with firewall settings on them: their whole network is unreachable from the Internet anyway. You reach the services through nginx, installed either on the gateway or on a separate virtual machine with ports forwarded to it.

- My personal example. I have a Synology server at home, and I want simple HTTPS access to it from a browser by domain name. Nothing could be easier. On the nginx server I obtain a free Let's Encrypt certificate and proxy requests to my home IP address; there, the gateway forwards them inside the local network to the Synology server. I can also use the firewall to allow access to the server only from the single IP address where nginx runs. As a result, nothing needs to be configured on the Synology itself. It doesn't even know that it is being accessed over HTTPS on the standard port 443.

- Suppose you have a large project split into components that live on different servers. For example, the forum runs on a separate server but is available at the /forum path of the main domain. You simply configure proxying of all requests to /forum to that separate server. In the same way, you can move all images to another server and proxy requests for them. In short, you can create any location and forward its requests to other servers.
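To make the single-entry-point idea concrete, here is a minimal Python sketch of the routing decision the front server makes. It assumes a hypothetical routing table in which each URL prefix maps to an internal backend; only blog_srv's address (192.168.63.37) comes from this article, and the forum address is made up for illustration. Like nginx prefix locations, it picks the longest matching prefix; regex locations are not modeled.

```python
# Hypothetical routing table: URL prefix -> internal backend.
# 192.168.63.37 is blog_srv from this article; 192.168.63.40 is invented.
ROUTES = {
    "/": "http://192.168.63.37",
    "/forum/": "http://192.168.63.40",
}


def pick_backend(path: str, routes: dict = ROUTES) -> str:
    """Return the backend for a request path using the longest matching
    prefix, similar to how nginx chooses among prefix locations."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no location matches {path!r}")
    best = max(matches, key=len)
    return routes[best]


if __name__ == "__main__":
    print(pick_backend("/index.php"))       # http://192.168.63.37
    print(pick_backend("/forum/topic/42"))  # http://192.168.63.40
```

With this layout, moving the forum to another machine means changing one entry in the table, the same kind of one-line change as editing a proxy_pass address.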

I hope the general idea is clear. There are many uses; I've listed the most common ones that came to mind and that I use myself. Among the advantages I find most useful in my own cases, I would highlight two:

- You can easily set up HTTPS access to services without touching the services themselves. You obtain and use certificates on the nginx server, clients connect to it over HTTPS, and nginx passes the requests on to the servers with the services, which can work over plain HTTP and know nothing about HTTPS.

- You can easily change the address to which requests are proxied. Suppose requests to your website are proxied to a separate server. You have prepared an update or a migration of the site and debugged everything on a new server. Now all you need to do is replace the old server's address with the new one on the nginx server, and requests will go there. That's all. If something goes wrong, you can quickly switch everything back.

That's it for the theory. Now let's move on to configuration examples. In my examples I will use the following notation:

- blog.cmsdaddy.com: domain name of the test site
- nginx_srv: name of the external server with nginx installed
- blog_srv: local server hosting the site, to which we proxy connections
- 84.152.241.248: external IP address of nginx_srv
- 192.168.63.37: IP address of blog_srv
- 79.38.244.138: IP address of the client from which I will open the site

Configuring proxy_pass in nginx

Let's start with the simplest example. Here and below I use my technical domain cmsdaddy.com. Suppose we have a site, blog.cmsdaddy.com. In DNS, its A record points to the IP address of nginx_srv, the server where nginx is installed. We will proxy all requests from this server to blog_srv on the local network, where the site actually lives. Here is the server section:

```nginx
server {
    listen 80;
    server_name blog.cmsdaddy.com;
    access_log /var/log/nginx/blog.cmsdaddy.com-access.log;
    error_log /var/log/nginx/blog.cmsdaddy.com-error.log;

    location / {
        proxy_pass http://192.168.63.37;
        # pass the original Host header instead of the backend's IP
        proxy_set_header Host $host;
        # client address chain and the client address itself
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```
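The three proxy_set_header lines decide what blog_srv learns about the original request. The following Python sketch models them under simplifying assumptions (header names are matched exactly, and the value of $host is passed in ready-made). The key detail is $proxy_add_x_forwarded_for: it appends the client address to any X-Forwarded-For header the client already sent, or is just the client address if there was none. Because a client can put anything into that incoming header, X-Real-IP, which nginx_srv fills only from $remote_addr, is the more trustworthy of the two.

```python
def proxy_headers(client_ip: str, host: str, incoming: dict) -> dict:
    """Model the headers that `location /` on nginx_srv sends to blog_srv."""
    previous = incoming.get("X-Forwarded-For")
    # $proxy_add_x_forwarded_for: "<incoming value>, <client_ip>",
    # or just client_ip when the client sent no X-Forwarded-For header
    forwarded_for = f"{previous}, {client_ip}" if previous else client_ip
    return {
        "Host": host,                      # proxy_set_header Host $host;
        "X-Forwarded-For": forwarded_for,  # ... $proxy_add_x_forwarded_for;
        "X-Real-IP": client_ip,            # ... X-Real-IP $remote_addr;
    }


if __name__ == "__main__":
    # A client that already sent its own X-Forwarded-For header
    print(proxy_headers("79.38.244.138", "blog.cmsdaddy.com",
                        {"X-Forwarded-For": "10.0.0.5"}))
```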

Now open http://blog.cmsdaddy.com. You should land on blog_srv, where a web server must also be running; in my case it is nginx as well. You should see the same content you get by opening http://192.168.63.37 from the local network. If something doesn't work, first check that the address in the proxy_pass directive works correctly on its own.
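That first check can also be scripted. The sketch below is a hypothetical helper, not part of the article's setup; it assumes Python 3 is available on nginx_srv and treats any non-5xx answer from the proxy_pass address as "the backend is up". It deliberately ignores http_proxy settings so that it talks to the backend directly.

```python
import urllib.error
import urllib.request


def backend_ok(url: str, timeout: float = 3.0) -> bool:
    """Return True if the backend answers `url` with a non-5xx HTTP status."""
    # Empty ProxyHandler: ignore http_proxy/https_proxy, go straight to the backend
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status < 500
    except urllib.error.HTTPError as err:
        # 4xx means the server is up and answering; 5xx means it is broken
        return err.code < 500
    except OSError:
        # URLError (refused connection, DNS failure) and timeouts are OSErrors
        return False


if __name__ == "__main__":
    # Run on nginx_srv: is blog_srv reachable at the proxy_pass address?
    print(backend_ok("http://192.168.63.37/"))
```

If this prints False on nginx_srv, the problem is between nginx_srv and blog_srv, not in the proxy configuration.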

Let's look at the logs on both servers. On nginx_srv I see my request:

```
79.38.244.138 - - [19/Jan/2018:15:15:40 +0300] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko"
```

Checking blog_srv:

```
84.152.241.248 - - [19/Jan/2018:15:15:40 +0300] "GET / HTTP/1.0" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko" "79.38.244.138"
```

As we can see, the request first reached nginx_srv and was forwarded to blog_srv, where it arrived with the sender address 84.152.241.248, which is the address of nginx_srv. (Note also HTTP/1.0: by default nginx talks to the backend over HTTP/1.0.) The client's real IP address appears only at the very end of the line, where the log records the X-Forwarded-For header. This is inconvenient, because in PHP $_SERVER['REMOTE_ADDR'] will not return the client's real IP address, and it is often needed. We'll fix this shortly, but first let's create a test page in the site root on blog_srv that shows the client's IP address:

```php
<?php echo $_SERVER['REMOTE_ADDR']; ?>
```

Name it myip.php. Open http://blog.cmsdaddy.com/myip.php and see which address the server reports. It will not be yours: the page shows the address of nginx_srv. Let's fix this and teach nginx to pass the client's real IP address to the server.
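If your backend does not run PHP, a similar test page can be written as a tiny WSGI application. This is a Python stand-in for myip.php, not part of the article's setup; it also prints the X-Real-IP header so you can compare the two values side by side. The port 8000 in the `__main__` block is an assumption, so proxy_pass would have to point at that port if you try it this way.

```python
def myip_app(environ, start_response):
    """WSGI equivalent of myip.php: report the address the backend sees."""
    remote = environ.get("REMOTE_ADDR", "")
    # Header set on nginx_srv by proxy_set_header X-Real-IP $remote_addr
    real_ip = environ.get("HTTP_X_REAL_IP", "-")
    body = f"REMOTE_ADDR={remote}\nX-Real-IP={real_ip}\n".encode()
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server
    # Assumed test port on blog_srv; listens on all interfaces
    make_server("0.0.0.0", 8000, myip_app).serve_forever()
```

Before the fix below, REMOTE_ADDR shows nginx_srv's address while X-Real-IP already carries the client's address.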

Passing the client's real IP address to the backend with proxy_pass

In the previous example we are already sending the client's real IP address: the proxy_set_header directive puts it into the X-Real-IP header. Now, on the receiving side (blog_srv), we need to do the reverse substitution: replace the sender's address with the one from the X-Real-IP header. Add the following parameters to the server section:

```nginx
set_real_ip_from 84.152.241.248;
real_ip_header X-Real-IP;
```

These directives come from ngx_http_realip_module, which is not built by default (it is enabled with --with-http_realip_module), although most distribution packages include it. In its simplest form, the server section on blog_srv looks like this:

```nginx
server {
    listen 80 default_server;
    server_name blog.cmsdaddy.com;
    root /usr/share/nginx/html;

    # Trust X-Real-IP only when the request comes from nginx_srv
    set_real_ip_from 84.152.241.248;
    real_ip_header X-Real-IP;

    location / {
        index index.php index.html index.htm;
        try_files $uri $uri/ =404;
    }

    location ~ \.php$ {
        fastcgi_pass 127.0.0.1:9000;
        fastcgi_index index.php;
        fastcgi_intercept_errors on;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_ignore_client_abort off;
    }

    error_page 404 /404.html;
    location = /404.html {
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
    }
}
```

Save the config, reload nginx, and open http://blog.cmsdaddy.com/myip.php again. You should now see your real IP address, and the same address will appear in the web server log on blog_srv.
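The decision the realip module makes on blog_srv can be sketched in Python as follows. This is a simplification, not nginx's code: it ignores real_ip_recursive and the X-Forwarded-For mode, and in this sketch a malformed header is simply ignored. Its purpose is to show why set_real_ip_from matters: without the trusted-proxy check, any client reaching blog_srv directly could claim any address via X-Real-IP.

```python
import ipaddress

# set_real_ip_from 84.152.241.248;  (nginx_srv is the only trusted proxy)
TRUSTED = [ipaddress.ip_network("84.152.241.248/32")]


def effective_client_ip(remote_addr: str, headers: dict) -> str:
    """Sketch of real_ip_header X-Real-IP: use the header only when the
    connection itself comes from a trusted proxy."""
    addr = ipaddress.ip_address(remote_addr)
    if any(addr in net for net in TRUSTED):
        real = headers.get("X-Real-IP")
        if real:
            try:
                return str(ipaddress.ip_address(real.strip()))
            except ValueError:
                pass  # malformed header: keep the connection address
    return remote_addr


if __name__ == "__main__":
    print(effective_client_ip("84.152.241.248", {"X-Real-IP": "79.38.244.138"}))
```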

Next, let's look at more complex configurations.

Serving HTTPS through nginx with proxy_pass

If your site runs on HTTPS, it is enough to configure SSL on nginx_srv alone, provided you are not worried about traffic between nginx_srv and blog_srv travelling over an unencrypted protocol. I'll show an example with a free Let's Encrypt certificate. This is exactly one of the cases where I use proxy_pass: it is very convenient to have one server automatically obtain all the certificates you need. I covered setting up Let's Encrypt in detail in a separate article. Here I'll assume you already have certbot and everything is ready to obtain a new certificate, which will then be renewed automatically.

For this, we need to add one more location on nginx_srv: /.well-known/acme-challenge/. At the moment of obtaining the certificate, the full server section of our test site looks like this:

```nginx
server {
    listen 80;
    server_name blog.cmsdaddy.com;
    access_log /var/log/nginx/blog.cmsdaddy.com-access.log;
    error_log /var/log/nginx/blog.cmsdaddy.com-error.log;

    # Let's Encrypt challenge files are served locally from here
    location /.well-known/acme-challenge/ {
        root /web/sites/blog.cmsdaddy.com/www/;
    }

    location / {
        proxy_pass http://192.168.63.37;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```

Reload the nginx config and obtain the certificate. After that, the config changes to the following:

```nginx
server {
    listen 80;
    server_name blog.cmsdaddy.com;
    access_log /var/log/nginx/blog.cmsdaddy.com-access.log;
    error_log /var/log/nginx/blog.cmsdaddy.com-error.log;
    return 301 https://$server_name$request_uri;  # redirect plain HTTP requests to HTTPS
}

server {
    listen 443 ssl http2;
    server_name blog.cmsdaddy.com;
    access_log /var/log/nginx/blog.cmsdaddy.com-ssl-access.log;
    error_log /var/log/nginx/blog.cmsdaddy.com-ssl-error.log;

    # "ssl on;" is not needed: the ssl parameter of listen enables TLS
    ssl_certificate /etc/letsencrypt/live/blog.cmsdaddy.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/blog.cmsdaddy.com/privkey.pem;
    ssl_session_timeout 5m;
    ssl_protocols TLSv1.2 TLSv1.3;  # TLSv1 and TLSv1.1 are deprecated
    ssl_dhparam /etc/ssl/certs/dhparam.pem;
    ssl_ciphers 'EECDH+AESGCM:EDH+AESGCM:AES256+EECDH:AES256+EDH';
    ssl_prefer_server_ciphers on;
    ssl_session_cache shared:SSL:10m;

    location /.well-known/acme-challenge/ {
        root /web/sites/blog.cmsdaddy.com/www/;
    }

    location / {
        proxy_pass http://192.168.63.37;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```

Our site now works over HTTPS even though we didn't touch the server that hosts it. For an ordinary website this may matter less, but if you are proxying requests not to a regular site but to some non-standard service that is hard to move to HTTPS, this can be a good solution.
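To check from a script that nginx_srv now terminates TLS with a valid certificate, and later that automatic renewal keeps it fresh, you can use Python's standard ssl module. This is a sketch, not part of the article's setup: it verifies the chain and hostname against the system trust store and reports how many days remain before the certificate's notAfter date.

```python
import socket
import ssl
import time
from typing import Optional


def days_left(not_after: str, now: Optional[float] = None) -> float:
    """Days until a notAfter timestamp as returned by SSLSocket.getpeercert()."""
    expires = ssl.cert_time_to_seconds(not_after)  # e.g. "Jan  5 09:34:43 2018 GMT"
    current = time.time() if now is None else now
    return (expires - current) / 86400


def cert_days_left(host: str, port: int = 443, timeout: float = 5.0) -> float:
    """Open a verified TLS connection and return days until the cert expires."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return days_left(cert["notAfter"])


if __name__ == "__main__":
    print(round(cert_days_left("blog.cmsdaddy.com")), "days left")
```

Let's Encrypt certificates are valid for 90 days and certbot renews them when fewer than 30 remain by default, so a value that keeps falling well below 30 suggests renewal is not working.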

Consider another example. Suppose your forum lives at http://blog.cmsdaddy.com/forum/ and you want to move it to a separate web server to improve performance. To do this, add one more location to the previous configuration:

```nginx
location /forum/ {
    proxy_pass http://192.168.63.37;  # put the forum server's address here
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_redirect default;
}
```
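One detail matters a lot here: whether proxy_pass contains a URI part. With `proxy_pass http://192.168.63.37;` (no URI) the request URI is passed unchanged, so the forum server must itself serve the forum under /forum/. With a URI, for example `proxy_pass http://192.168.63.37/;`, the part that matched the location is replaced by that URI. The Python sketch below models this rule for prefix locations only; it ignores URI normalization, and the "/board/" value in the example is hypothetical.

```python
from typing import Optional


def upstream_uri(request_uri: str, location: str, proxy_pass_uri: Optional[str]) -> str:
    """Sketch of the URI that a prefix location sends upstream.

    proxy_pass_uri is None for `proxy_pass http://192.168.63.37;` (no URI part),
    or the URI part, e.g. "/" for `proxy_pass http://192.168.63.37/;`.
    """
    if not request_uri.startswith(location):
        raise ValueError(f"{request_uri!r} does not match location {location!r}")
    if proxy_pass_uri is None:
        # No URI in proxy_pass: the request URI is passed unchanged
        return request_uri
    # URI in proxy_pass: the part matching the location is replaced by it
    return proxy_pass_uri + request_uri[len(location):]


if __name__ == "__main__":
    print(upstream_uri("/forum/viewtopic.php?t=1", "/forum/", None))  # /forum/viewtopic.php?t=1
    print(upstream_uri("/forum/viewtopic.php?t=1", "/forum/", "/"))   # /viewtopic.php?t=1
```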

Another popular solution is to serve images from one server and everything else from another. In my example, the images live on the same server as nginx, and the rest of the site is on another server. The locations then look roughly like this:

```nginx
location / {
    proxy_pass http://192.168.63.37;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Real-IP $remote_addr;
}

# Images are served directly from nginx_srv
location ~ \.(gif|jpg|png)$ {
    root /web/sites/blog.cmsdaddy.com/www/images;
}
```
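Two things decide where an image request ends up. The regex location is checked after the prefix `location /` and wins when it matches; `~` is case-sensitive, so `.PNG` would still go to the backend (`~*` ignores case). And `root` appends the whole URI path to the root directory without stripping anything, so the directory tree under .../www/images must mirror the URL paths. A small Python sketch of both rules, matching paths only and ignoring query strings:

```python
import re

# location ~ \.(gif|jpg|png)$   ("~" is case-sensitive)
IMAGE_LOCATION = re.compile(r"\.(gif|jpg|png)$")


def served_locally(uri: str) -> bool:
    """True if the regex location takes the request instead of `location /`."""
    path = uri.split("?", 1)[0]  # locations match the path, not the query string
    return IMAGE_LOCATION.search(path) is not None


def file_for(root: str, uri: str) -> str:
    """The root directive appends the full URI path to root; nothing is stripped."""
    path = uri.split("?", 1)[0]
    return root.rstrip("/") + path


if __name__ == "__main__":
    print(file_for("/web/sites/blog.cmsdaddy.com/www/images", "/uploads/logo.png"))
    # /web/sites/blog.cmsdaddy.com/www/images/uploads/logo.png
```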

For all this to work correctly, the site itself must be able to put its images in the right place. There are many ways to arrange this: you can mount network shares on the server in various ways, or the developers can change the code that controls where images are stored. Either way, this has to be planned for the site as a whole.

The proxy module has many more directives, all described in the corresponding nginx documentation. I'm not a great nginx expert. Mostly I use my ready-made configs, often without digging into the details if they solve the problem right away. I pick things up from other people's configs, note them down, and try to figure them out.

Pay special attention to the proxy_cache caching directives if you need caching. A properly tuned cache can significantly improve the site's response time. But this is a delicate matter that has to be configured separately in each case; there are no ready-made recipes.
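One reason caching is delicate is what goes into the cache key. By default nginx uses `proxy_cache_key $scheme$proxy_host$request_uri;`, so neither cookies nor the client's Host header are part of the key: two users requesting the same URI get the same cached page unless you change the key or bypass the cache for them. The Python sketch below rebuilds that default key and the file path nginx would use for it with `levels=1:2` (the MD5 of the key, split into subdirectories). The cache directory /var/cache/nginx is an assumed example.

```python
import hashlib


def default_cache_key(scheme: str, proxy_host: str, request_uri: str) -> str:
    """Default proxy_cache_key: $scheme$proxy_host$request_uri (no cookies)."""
    return f"{scheme}{proxy_host}{request_uri}"


def cache_file(cache_dir: str, key: str) -> str:
    """Path of the cache entry for `key` with proxy_cache_path ... levels=1:2."""
    digest = hashlib.md5(key.encode()).hexdigest()
    # levels=1:2 -> first level is the last hex digit,
    # second level is the two digits before it
    return f"{cache_dir}/{digest[-1]}/{digest[-3:-1]}/{digest}"


if __name__ == "__main__":
    key = default_cache_key("http", "192.168.63.37", "/index.php?p=1")
    print(key)  # http192.168.63.37/index.php?p=1
    print(cache_file("/var/cache/nginx", key))
```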

Conclusion

Didn't like the article and want to teach me how to administer this properly? Please do, I love learning. The comments are open. Tell me how to do it right!

That's all I have. I didn't cover one more option: proxying an HTTPS site and passing traffic to the backend over HTTPS as well. I had no working example at hand to test, and I didn't want to write a config I couldn't check. In theory there's nothing difficult here: configure nginx on both servers with the same certificate. But I can't say for sure that everything will work; there may be some nuances with this kind of proxying. I rarely need to do it.

As I wrote at the beginning, I mostly proxy requests from one external web server into a closed network perimeter that nobody else can reach. In that case I don't need HTTPS between nginx and the backend. And the backend doesn't have to be a separate server; it can easily be a container on the same host.